Master Thesis

We offer several master thesis projects to students enrolled in one of the master’s programs at the University of Namur. Those projects can cover one or more topics related to the research done at the SNAIL team or explore new directions. It is also possible to propose your own project related to our ongoing research. If you think you have a great idea, do not hesitate to contact us, but make sure you have clearly identified the research aspect and novelty of your proposal.

The project can be conducted at the computer science faculty, in collaboration with the members of the team, at another Belgian organization (industry, research center, university, …) with which we have an ongoing collaboration, or abroad at another university in our network.

If you study at a different university and you would like to do a research internship in the context of one of our projects, you should ask your own university supervisor to contact us. We have limited places available but are always interested in new research opportunities.

Master Thesis Projects

Current and Past Projects

Researchers and practitioners have developed automated test case generators for various languages and platforms, each with different strengths. Comparing these tools requires large-scale empirical evaluations, which demand significant setup and analysis. The JUnit Generation benchmarking infrastructure (JUGE) automates the production and comparison of Java unit tests for multiple purposes, aiming to streamline evaluation, support knowledge transfer, and standardize practices. This master’s thesis will use the existing JUGE infrastructure to conduct an extensive empirical comparison of unit test generators for Java, and provide the resulting dataset to the research community.

The use of Large Language Models (LLMs) for automated test generation offers promising results but remains constrained by issues like hallucinations and prompt size limitations. This thesis investigates the integration of a graph-based Retrieval Augmented Generation (RAG) technique to enhance test generation within TestSpark, an IntelliJ IDEA plugin. We introduce GRACE-TG (Graph-Retrieved Augmented Contextual Enhancement for Test Generation), which constructs a graph of code entities using the Program Structure Interface (PSI) and ranks nodes via a Personalized Weighted PageRank algorithm. This enables a precise selection of relevant context for LLMs while significantly reducing input size. Evaluation across 147 real-world Java bugs demonstrates that GRACE-TG reduces prompt sizes by over 97% compared to the current version of TestSpark, with equivalent or improved test coverage. These results suggest that graph-based retrieval can be a good candidate to improve test generation with LLMs.
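The ranking idea can be illustrated with a minimal sketch of personalized weighted PageRank computed by power iteration. This is not the GRACE-TG implementation: the example graph, the edge weights, the node names, the damping factor, and the iteration count are all assumptions made for illustration.

```python
def personalized_pagerank(edges, seeds, damping=0.85, iterations=50):
    """Power-iteration personalized PageRank on a weighted directed graph.

    edges: dict mapping node -> {neighbour: edge weight}
    seeds: dict mapping node -> personalization weight
           (hypothetical example: the method under test)
    """
    nodes = set(edges) | set(seeds)
    for targets in edges.values():
        nodes |= set(targets)

    total_seed = sum(seeds.values())
    # Teleport distribution: random jumps land only on seed nodes.
    teleport = {n: seeds.get(n, 0.0) / total_seed for n in nodes}

    rank = dict(teleport)
    for _ in range(iterations):
        new_rank = {n: (1.0 - damping) * teleport[n] for n in nodes}
        for node in nodes:
            out = edges.get(node, {})
            out_weight = sum(out.values())
            if out_weight == 0:
                # Dangling node: hand its mass back to the seed nodes.
                for n in nodes:
                    new_rank[n] += damping * rank[node] * teleport[n]
            else:
                # Split the node's mass proportionally to edge weights.
                for target, weight in out.items():
                    new_rank[target] += damping * rank[node] * weight / out_weight
        rank = new_rank
    return rank


# Hypothetical graph of code entities; weights stand for relation strength.
edges = {
    "Calculator.add": {"Calculator.validate": 2.0, "Logger.log": 0.5},
    "Calculator.validate": {"Range.contains": 1.0},
    "Logger.log": {},
    "Range.contains": {},
    "Unrelated.helper": {"Logger.log": 1.0},
}
seeds = {"Calculator.add": 1.0}

ranks = personalized_pagerank(edges, seeds)
top_context = sorted(ranks, key=ranks.get, reverse=True)[:3]
```

In this sketch, entities that cannot be reached from the seed receive no rank, so only the top-ranked entities would be kept as prompt context.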

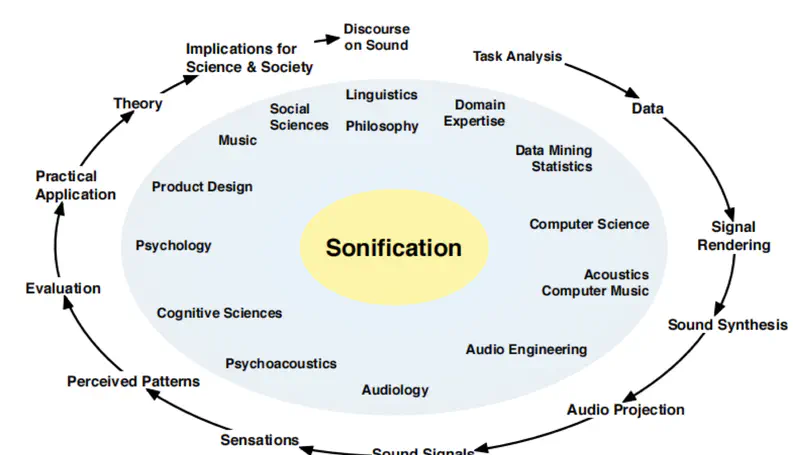

This thesis explores the integration of sonification and gamification in development environments in order to reduce the cognitive load on developers and improve their user experience. A state-of-the-art review was conducted based on scientific literature in various fields such as cognitive load, sonification, and gamification. Subsequently, a tool called EchoCode was developed to address the issue. It is an extension for Visual Studio Code that integrates the concepts of sonification and gamification. This extension offers three main features: personalized audio feedback associated with keyboard shortcuts, audio feedback that adapts according to the execution of a program (failure or success), and an integrated To-Do List with two operating modes to structure tasks in a software development context. This approach aims to relieve the visual channel, support working memory, and maintain optimal concentration.

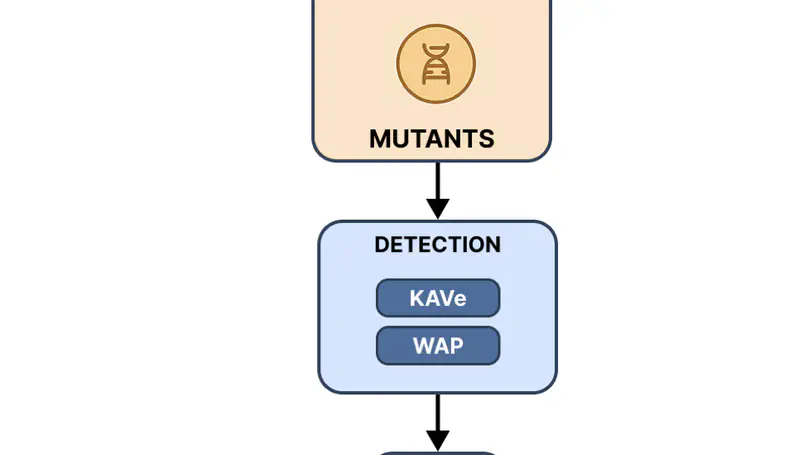

Looking for bugs and vulnerabilities is one of the most important tasks in computer science, especially in the context of web applications. There are many techniques to detect and prevent these issues, one of the most widely used being mutation testing. However, creating mutants manually is a time-consuming and error-prone process. To address this, we combine static analysis with an LLM to automatically generate mutants. In this study, we compare the performance of an LLM in producing mutants based on three different static analysis tools: KAVe, WAP, and the LLM itself. Our results show significant variability between tools. Mutants produced using traditional static analysers vary heavily depending on the type of vulnerability, and tend to perform better when tools are combined. When it comes to the LLM, the quality of mutants is more consistent across different vulnerabilities, and the overall code coverage is significantly higher than with traditional approaches. On the other hand, LLM-generated mutants have a higher success rate in passing initial verification, but often contain syntactic or semantic errors in the code. These findings suggest that LLMs are a promising addition to automated vulnerability testing workflows, especially when used in conjunction with static analysis tools. However, further refinement is needed to reduce the generation of incorrect or invalid code and to better align with real-world exploitability.

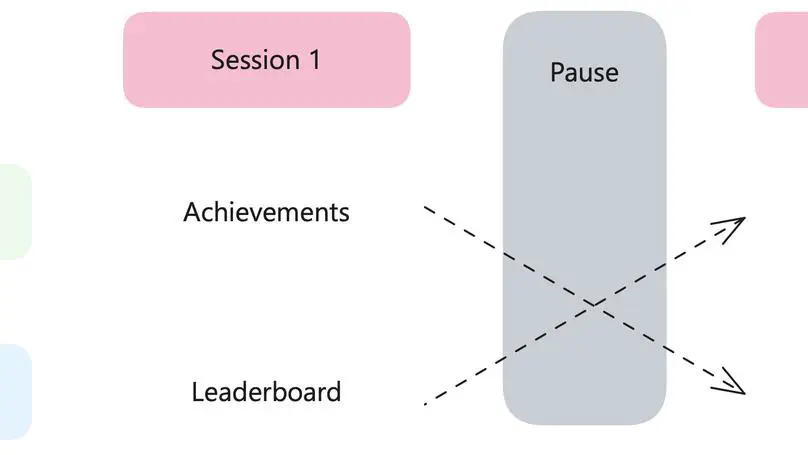

The practice of software testing is struggling to become widespread in the software development industry. This observation also applies to the academic world, where students often devote little energy to this activity, due to lack of motivation or time. To address these issues, this study explores the use of gamification as a lever of engagement in software testing. Following a state-of-the-art review of testing techniques and gamification principles applied to software development, an existing IntelliJ plugin was reused and enhanced. Initially centered on a system of achievements, this plugin was supplemented by a leaderboard, enabling a comparison of these two approaches. Achievements are badges, here represented by trophies, awarded when the user reaches certain levels of progress in the plugin. A leaderboard, on the other hand, is a table that ranks the various participants according to the points they have earned. The aim is to determine which mode better fosters student involvement while leading to better test-writing performance.

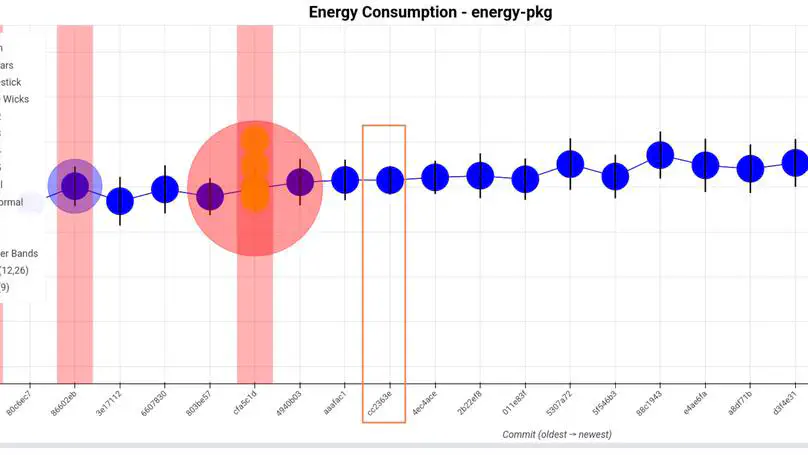

Green software engineering is emerging as a crucial response to the rising energy impact of digital technologies, which may soon rival aviation and shipping combined. While several tools aim to help developers track energy consumption and detect regressions, they all have their own limitations. This motivated the development of EnergyTrackr, a fully modular and automated tool designed to detect statistically significant energy changes.
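To make the notion of a statistically significant energy change concrete, here is a minimal sketch using a two-sided permutation test on repeated energy measurements. The source does not state which statistical test EnergyTrackr applies, so the choice of a permutation test, the function name, the sample values (in joules), and the 0.05 threshold are all assumptions made for illustration.

```python
import random
from statistics import mean


def permutation_test(before, after, permutations=2000, seed=0):
    """Two-sided permutation test on the difference of means.

    Returns an estimated p-value: the share of random relabelings whose
    mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs(mean(after) - mean(before))
    pooled = list(before) + list(after)
    n = len(before)
    extreme = 0
    for _ in range(permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[n:]) - mean(pooled[:n]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly positive.
    return (extreme + 1) / (permutations + 1)


# Hypothetical energy readings (joules) for two commits, six runs each.
energy_before = [10.1, 10.3, 9.9, 10.0, 10.2, 10.1]
energy_after = [11.0, 11.2, 10.9, 11.1, 11.3, 11.0]

p_value = permutation_test(energy_before, energy_after)
is_regression = p_value < 0.05 and mean(energy_after) > mean(energy_before)
```

A permutation test is used here because it makes no normality assumption about the measurements; other tests could equally be plugged in.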

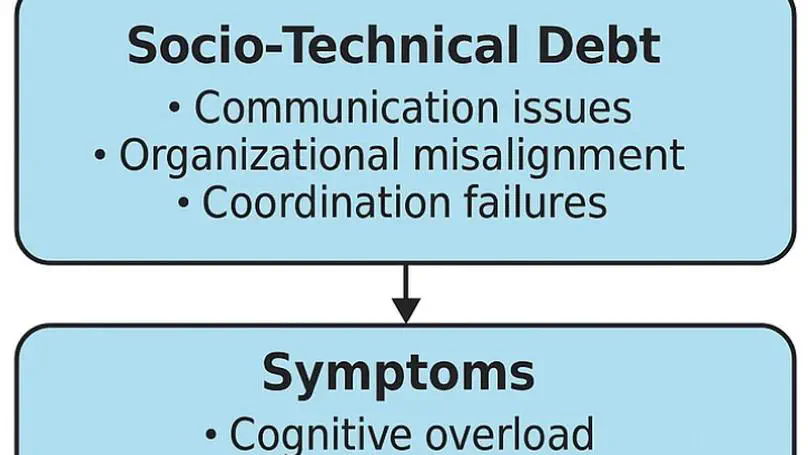

This thesis explores the impact of socio-technical debt on developer well-being. It combines two approaches: a quantitative approach (a structured questionnaire and a perceived stress scale) and a qualitative approach (a thematic analysis of open-ended responses). This study highlights existing links between perceived debt, social tensions, and workplace stress.

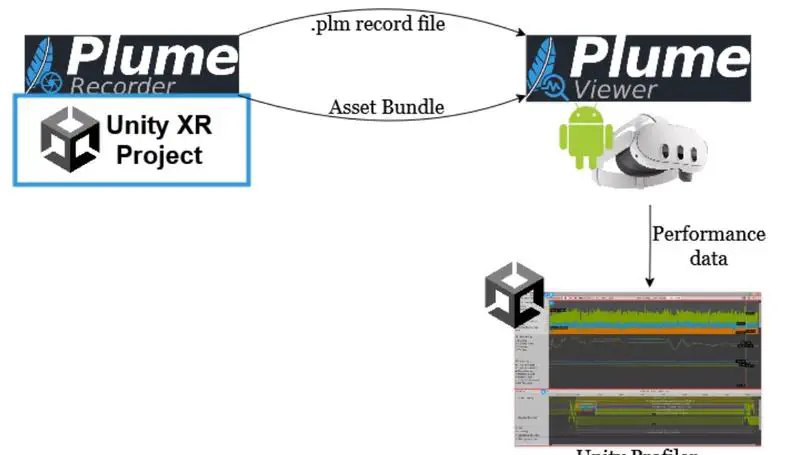

Software engineering for mixed reality headsets is still in its early stages even though the required hardware has been available for several decades. Existing methods to streamline development are still limited and developers often find themselves doing things manually. Mixed Reality system testing, for example, is often done by hand by developers who have to wear the headsets and do the testing as if they were an end user. This is a significant time sink in the development process.

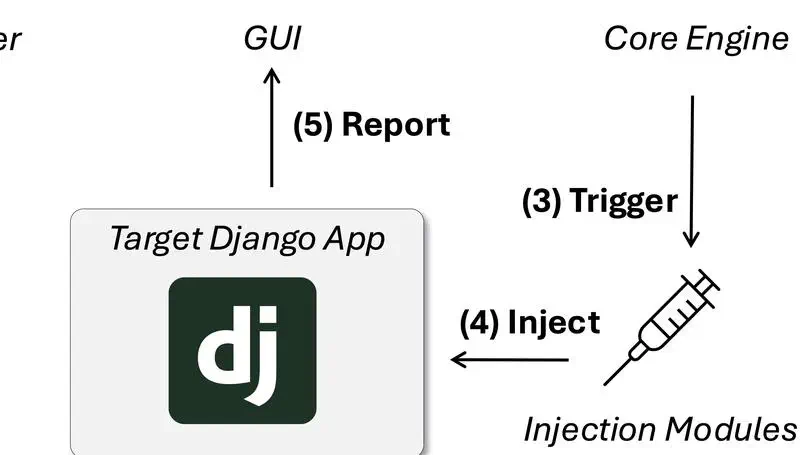

This thesis presents the development of a configurable tool that automatically injects web application vulnerabilities into existing Django codebases to support cybersecurity education. The primary goal is to enhance hands-on learning by allowing educators and students to engage with realistic, production-like environments that incorporate well-known security flaws, specifically drawn from the OWASP Top Ten 2021. Instead of generating synthetic applications, the tool modifies authentic Django projects, ensuring pedagogical relevance and structural fidelity.

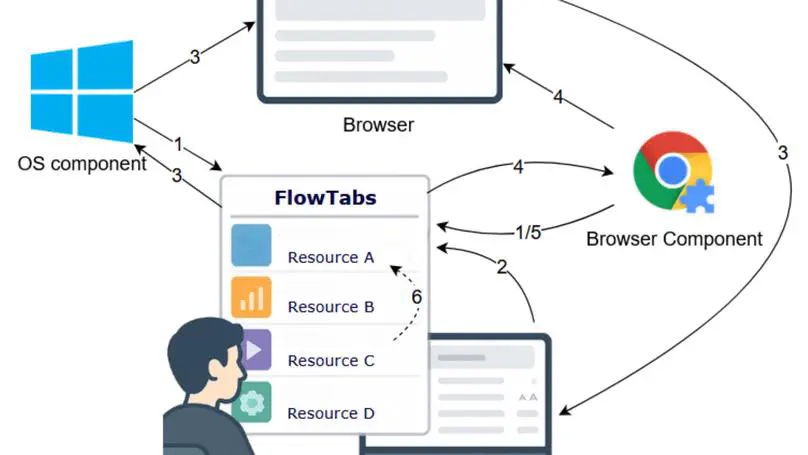

This master thesis explores the design and validation of a context management tool integrated into a development environment. Developers constantly use various resources (documentation, terminal, development tools, etc.), which forces them to frequently switch between their development environment and these resources to meet their needs. This often leads to interruptions, distractions, and consequently, a drop in productivity. To address this issue, the study proposes a solution in the form of an extension for the Visual Studio Code IDE, called FlowTabs. This extension brings resources together into a unified interface and uses a relevance algorithm that adapts to the developer’s behavior to suggest the most appropriate resources. User testing has shown that it integrates well into the development environment, offering a satisfactory developer experience, cognitive comfort and effective resource management. This work thus provides an original context management solution, directly embedded in a development environment.

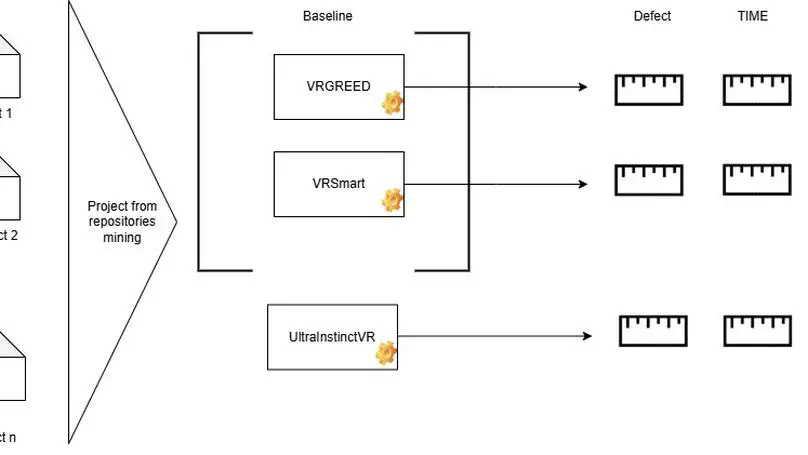

Virtual Reality (VR) is increasingly recognized as a technology with substantial commercial potential, progressively integrated into a wide array of everyday applications and supported by an expanding ecosystem of immersive devices. Despite this growth, the long-term adoption and maintenance of VR applications remain limited, particularly due to the lack of formalized software engineering practices adapted to the unique characteristics of VR environments. Among these challenges, the absence of effective, systematic, and reproducible tools for interaction testing constitutes a significant barrier to ensuring application quality and user experience.

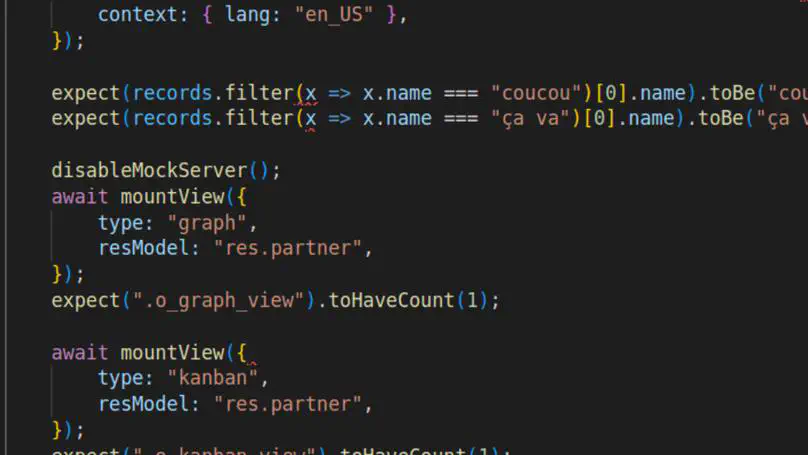

Odoo, a company offering business management services through a modular web application, has continuously expanded the scope and complexity of its software over the years. This growing complexity raises challenges in terms of testing performance, readability, and maintainability. While existing tools such as Tours and Hoot provide solutions for system-level and unit-level testing respectively, a gap remains between the two. This thesis introduces a new approach called Hoot-Intégration, which extends the Hoot testing framework to support integration testing by enabling real server interactions in a lightweight environment. The proposed solution is implemented, validated, and compared against existing test strategies from Odoo, showing notable gains in execution speed and test clarity. Although some technical limitations remain, the approach is effective and opens the door to future testing improvements in large and scaling modular systems like Odoo.

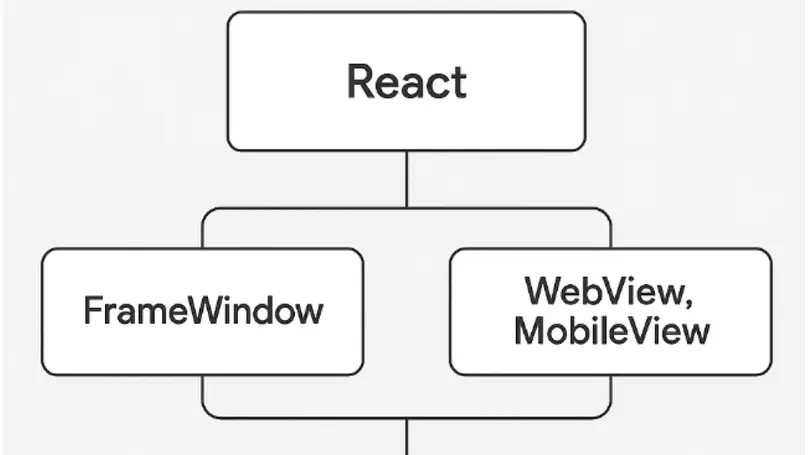

The evolution of digital usage has led to a rapid diversification of application runtime environments, ranging from traditional web browsers to immersive extended reality (XR) devices, as well as mobile and desktop platforms. This plurality of environments creates a growing need for solutions that can deliver a consistent user experience while minimizing the development and maintenance efforts associated with multi-platform deployment. However, current approaches often rely on separate implementations for each environment, leading to code duplication, visual and functional inconsistencies, and increased complexity in managing software evolution. These limitations hinder portability and slow down the time-to-market of multi-platform applications.

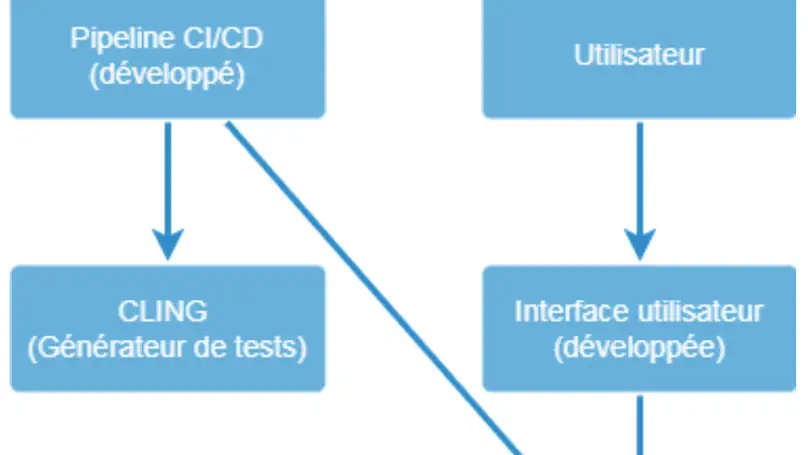

This thesis investigates the impacts and challenges of integrating CLING, a test generator for Java, into CI/CD (Continuous Integration/Continuous Deployment) pipelines. In a context where test automation is essential to ensure software quality, CLING generates integration tests automatically. Integrating this tool into a Docker environment standardizes and isolates the environments, thus ensuring consistent test execution. The work addresses the technical challenges encountered during this integration, such as configuring and automating necessary operations. It also describes the development of the API used to manage the data generated by CLING. The results show an improvement in the efficiency of the development process, particularly through the reduction of manual interventions. Finally, the thesis offers recommendations for developers and DevOps engineers looking to optimize the integration of test generators into their CI/CD pipelines.

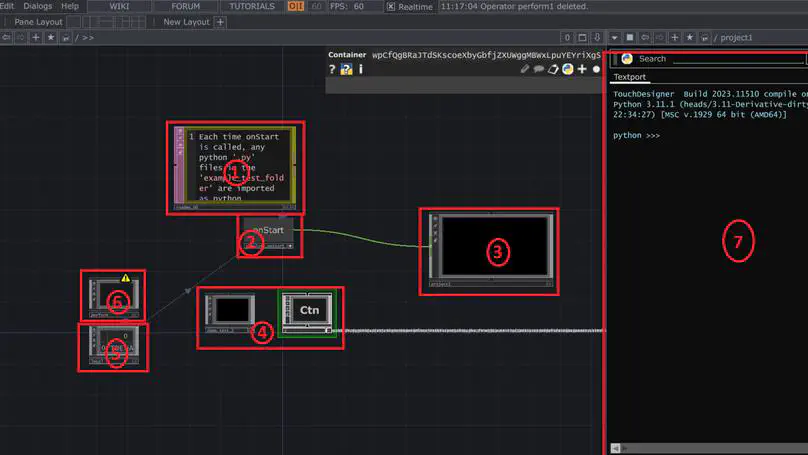

As creative coding in interactive art becomes increasingly popular in the digital art world, testing the work to ensure that it matches the artist’s expectations becomes essential. The problem is that the programs supporting these works can behave unexpectedly or cause problems. Some studies have shown that it is possible to test these projects manually, but the use of automated tests has been little studied. The research aims to explain to what extent it is possible to implement automated tests for functional and performance testing in interactive installation projects using hybrid development tools. To answer this question, we performed a case study on the Wall of fame project in TouchDesigner. Observations on automated test experiments and an interview were made. The results of the observations showed that it was possible to carry out functional and performance tests with limitations on the reliability of the data. Difficulties were identified: TouchDesigner dependencies, operator limitations, user interface interactions, lack of native test environments, performance test limitations and maintenance difficulties. Solutions have also been found to resolve these issues.

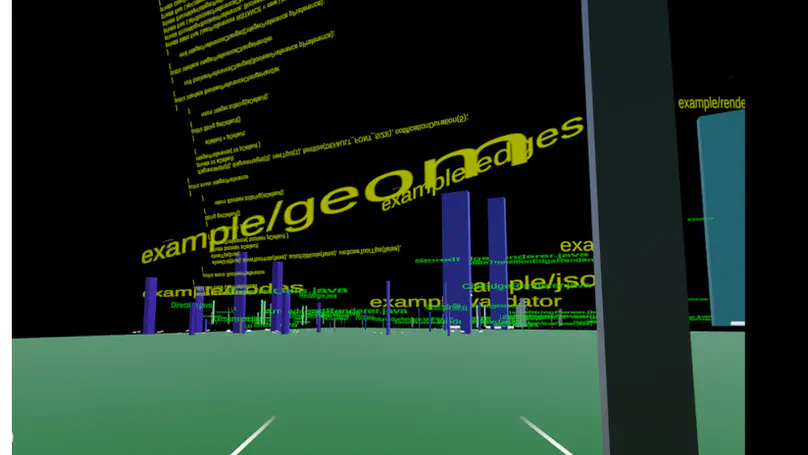

Learning to program, and especially understanding code, is a difficult task for newcomers. For this reason, aids are provided, such as IDEs, which give them tools to help them avoid syntax and/or semantic errors, depending on the programming languages used. However, these aids are not always sufficient to understand the written code, and more often than not, newcomers fail to understand the errors generated and their causes. For this purpose, code comprehension tools are available to help visualize the code. Some advances have even made it possible to use such visualization in virtual reality. That’s why, with the advent of mixed reality (MR), a draft code visualization application has been proposed. This solution, called codeMR, makes it possible to represent code in 3 dimensions following the city paradigm of codeCity. To test its viability, an experiment was carried out with 10 people to see whether it could have a future for understanding code through mixed reality. The results showed that the solution had the capabilities to help code comprehension. However, improvements to the application are still required to ensure optimal use in this context.

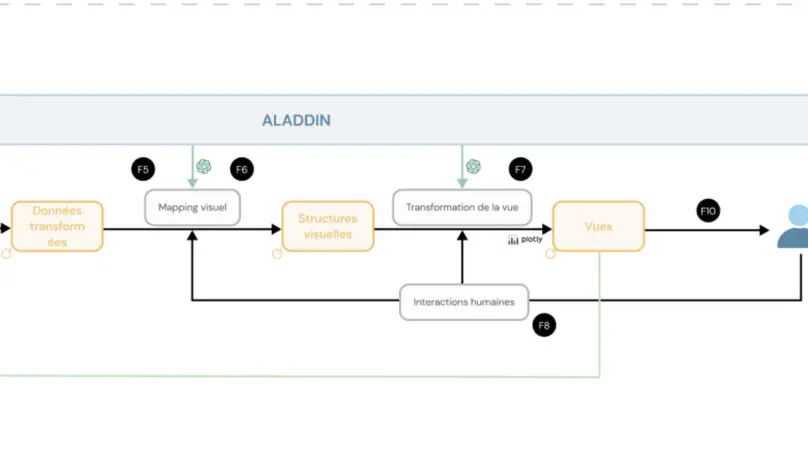

This dissertation explores the impact of using natural language in the visualisation of open datasets, focusing on the design and evaluation of Aladdin, a system based on the DSR approach. Aladdin uses advanced natural language processing techniques to transform text queries into interactive data visualisations.

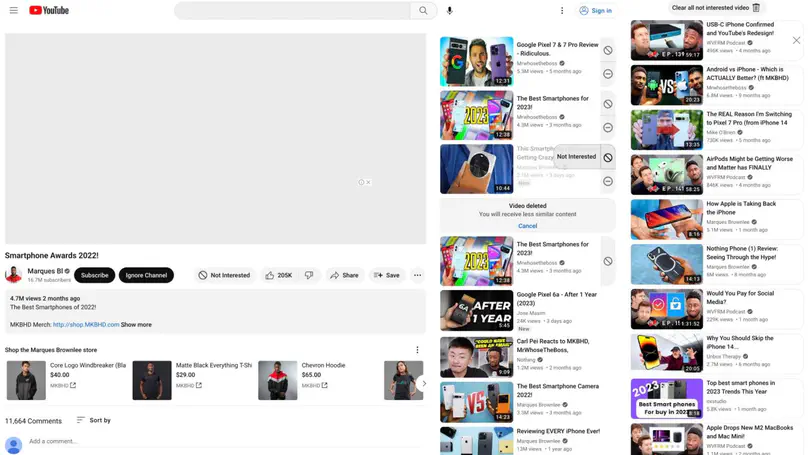

Amid growing concern over filter bubbles and content diversity, this thesis explores the impact of feedback mechanisms on user experience with YouTube’s recommendation algorithm. The study examines how increased user control can influence their interactions with the algorithm. Based on user interviews, personas were created to understand user behaviors and expectations. A Chrome extension was developed to allow users to report errors in their recommendation feed. Results indicate that this mechanism enhances user satisfaction and a sense of control, though some limitations suggest areas for future improvements. The study also proposes a methodology to evaluate contextual thematic diversity on YouTube, paving the way for further research into recommendation diversity and self-actualization systems.

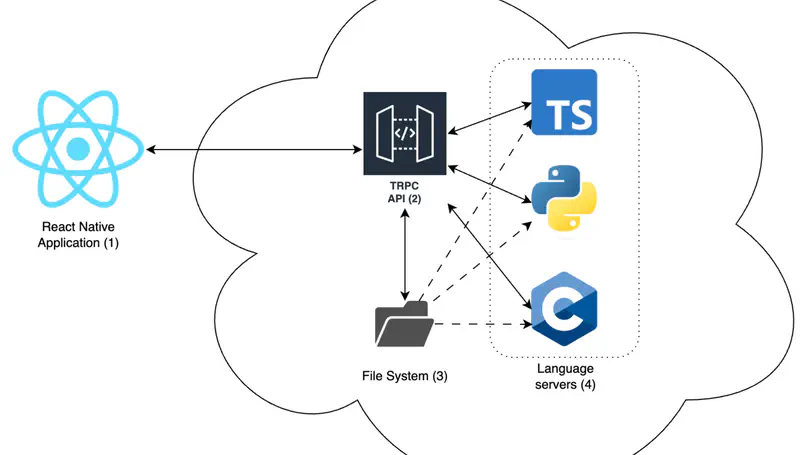

This master thesis investigates how the Language Server Protocol (LSP) can be used to develop a nomadic and ergonomic code editor. The popularity of mobile devices has significantly increased in the past decade, accelerating the shift from desktop solutions to mobile ones. However, code editing activities, traditionally carried out on a computer, have not yet found real alternatives that provide a suitable development environment and allow multilanguage support on mobile devices. Previous works focus on finding interaction solutions to improve code editing productivity, mainly adapting the code editor to a single programming language. By integrating the use of language servers through the LSP, we develop new design and interaction solutions to allow multilanguage support in a single mobile code editor. In this thesis, we present a prototype code editor combining interaction solutions found in the literature with LSP functionalities and evaluate it in terms of productivity and usability. This work aims to provide an alternative solution to the traditional desktop development environment on mobile devices, addressing the technological shifts and transforming the way developers may be coding in the future.

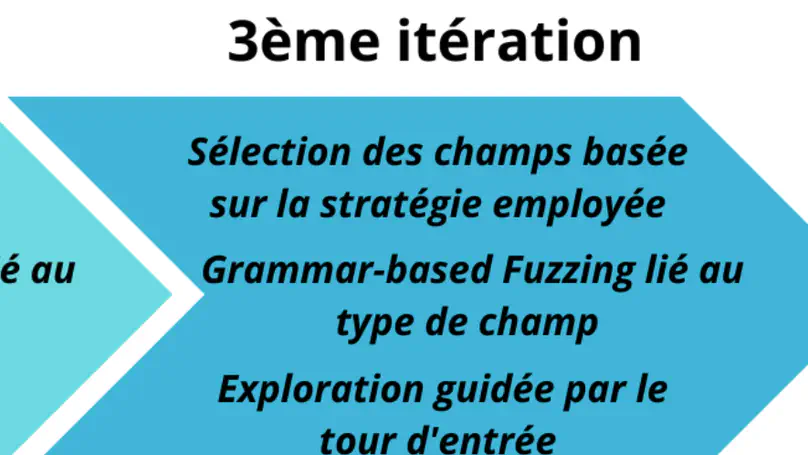

For many years, Odoo, a company providing business management services, has been constantly expanding the scope and increasing the complexity of its software, a web application. In response to that complexity, the introduction of automated testing techniques seems to be the next evolution of the testing tools already available to them. In the past, other tools for automatically testing web interfaces have been created, but often with limitations. This thesis explores the techniques that can be applied to implement fuzzing on the Odoo software web interface. It is shown that some methods do not seem applicable at present, while others work very well. A viable method will be proposed and implemented, and different configurations of the method will be evaluated. Ultimately, it will be shown that some weaknesses are present in the proposed method, but that future work in this direction can be done.

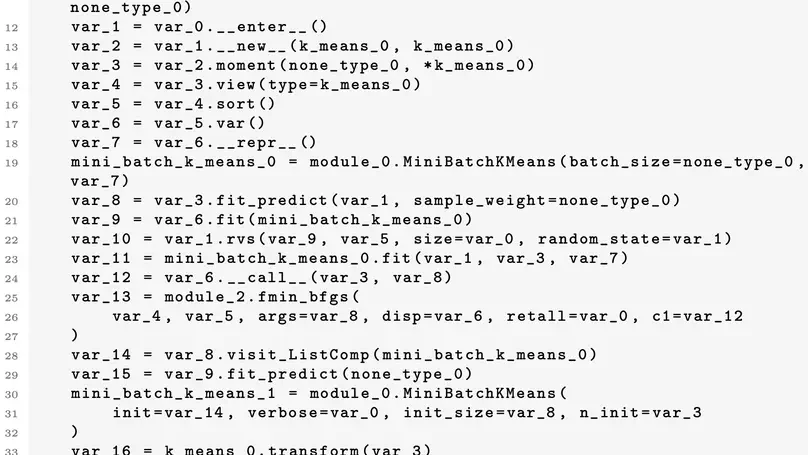

The field of automated test case generation has grown considerably in recent years to reduce software testing costs and find bugs. However, the techniques for automatically generating test cases for machine learning libraries still produce low-quality tests, and papers on the subject tend to work in Java, whereas the machine learning community tends to work in Python. Some papers have attempted to explain the causes of these poor-quality tests and to make it possible to generate tests in Python automatically, but they are still fairly recent, and therefore, no study has yet attempted to improve these test cases in Python. In this thesis, we introduce 2 improvements for Pynguin, an automated test case generation tool for Python, to generate better test cases for machine learning libraries using structured input data and to better manage crashes from C-extension modules. Based on a set of 7 modules, we will show that our approach has made it possible to cover lines of code unreachable with the traditional approach and to generate error-revealing test cases. We expect our approach to serve as a starting point for integrating testers’ knowledge of the input data of programs more easily into automated test case generation tools and for creating tools that find more crash-causing bugs.

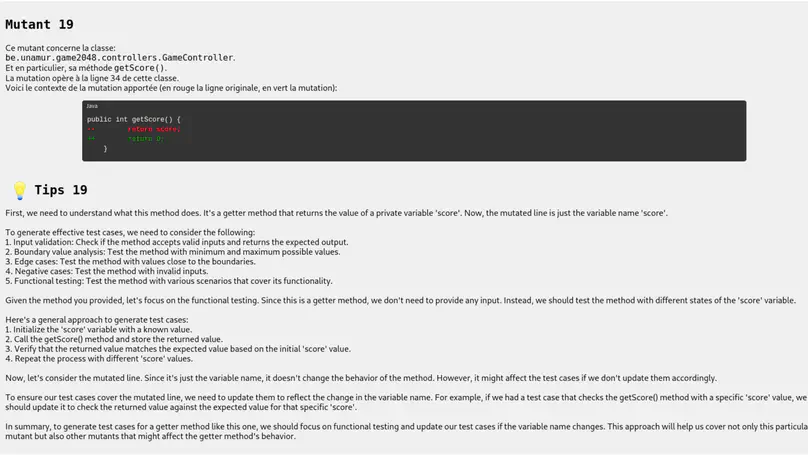

The widespread digitalization of society and the increasing complexity of software make it essential to develop high-quality software testing suites. In recent years, several techniques for learning software testing have been developed, including techniques based on mutation testing. At the same time, the recent performance of language models in both text comprehension and generation, as well as code generation, makes them potential candidates for assisting students in learning how to develop tests. To confirm this, an experiment was carried out with students with little experience in software testing, comparing the results obtained by some students using a report from a classic mutation testing tool and a report augmented with hints generated by a language model. The results seem promising since the augmented reports improved the mutation score and mutant coverage within the group more generally than the other reports. In addition, the augmented reports seem to have been most effective in testing methods for modifying and retrieving private variable values.

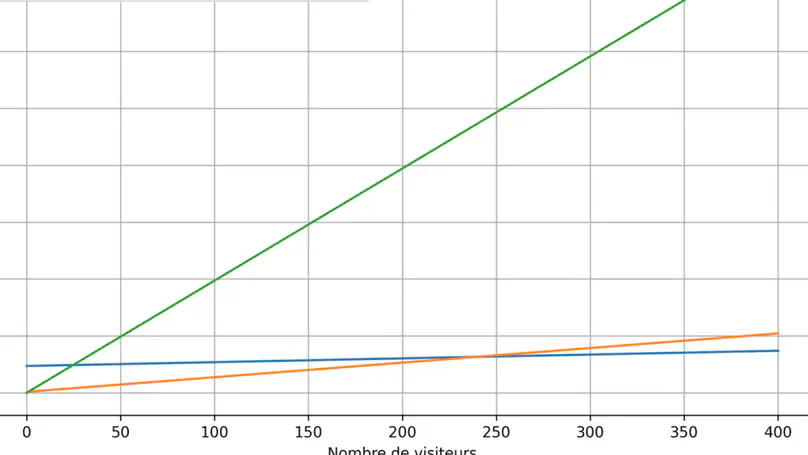

Energy efficiency in computing is an important subject that is increasingly being addressed by researchers and developers. Nowadays, the majority of websites are built using the WordPress CMS, while other developers prefer to use more secure and energy-efficient site generators. This study focuses on the server-side energy consumption of these two methods of creating websites. A detailed analysis of the results will make it possible to identify borderline cases and suggest recommendations on the best technology to use, depending on the type of project.

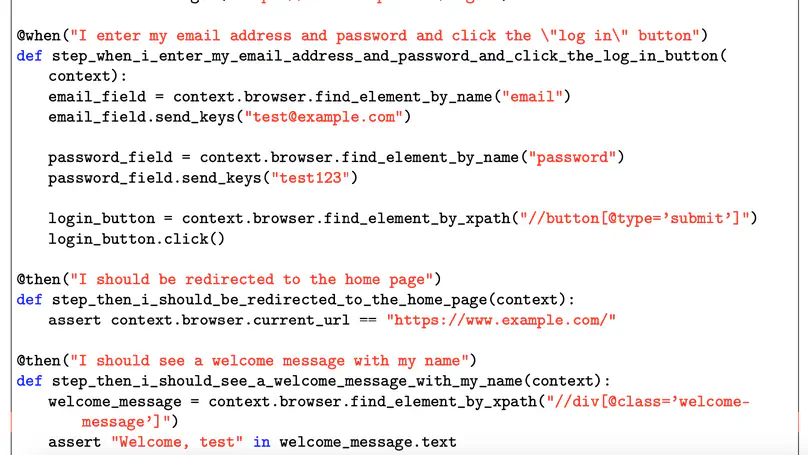

Software development faces persistent challenges in terms of maintainability and efficiency, and this is driving the ongoing search for innovative approaches. Agile methodologies, in particular Behaviour-Driven Development (BDD), have gained ground in society thanks to their ability to promote responsiveness to change and communication between stakeholders. However, as with many methods, the use of BDD can lead to maintenance costs and productivity problems. To meet these challenges, this research investigates the adaptation of advanced automatic data generation techniques, in particular SELF-INSTRUCT, to augment BDD datasets.

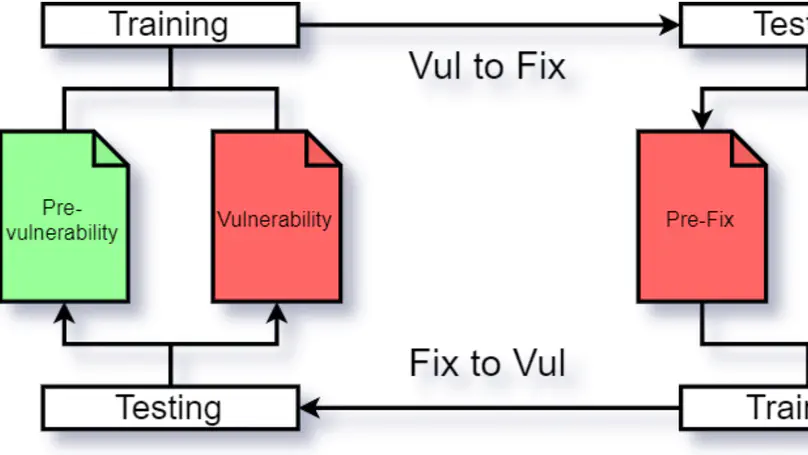

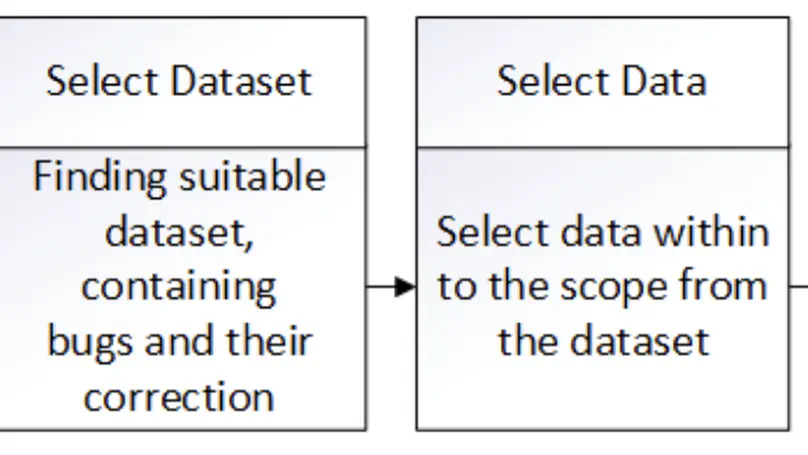

Multiple techniques exist to find vulnerabilities in code, such as static analysis and machine learning. Although machine learning techniques are promising, they need to learn from a large quantity of examples. Since no such large quantity of data exists for vulnerable code, vulnerability injection techniques have been developed to create it. Both vulnerability prediction and injection techniques based on machine learning usually use the same kind of data, namely pairs of vulnerable code, just before the fix, and its fixed version. However, using the fixed version is not realistic, as the vulnerability was introduced in a different version of the code that may be very different from the fixed version. Therefore, we suggest using pairs made of the code that introduced the vulnerability and its previous version. This is more realistic, but it is only relevant if machine learning techniques can properly learn from such pairs and if the patterns learned are significantly different from those learned with the usual method. To verify this, we trained vulnerability prediction models on both kinds of data and compared their performance. Our analysis showed that a model trained on pairs of vulnerable code and their fixed version is unable to predict vulnerabilities from the vulnerability-introducing versions. The same holds in the opposite direction, even though both models are able to properly learn from their data and detect vulnerabilities on similar data. Therefore, we conclude that using vulnerability-introducing code for machine learning training is more relevant than using the fixed versions.

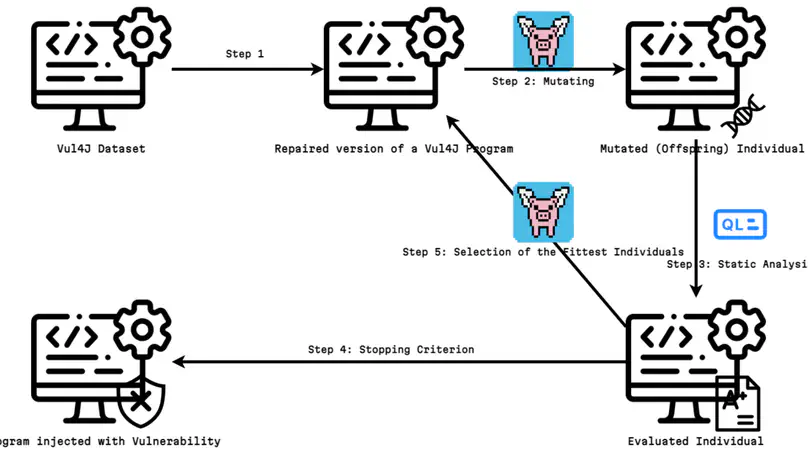

This thesis explores the idea of applying genetic improvement with the aim of injecting vulnerabilities into programs. Generating vulnerabilities automatically in this manner would allow creating datasets of vulnerable programs. This would, in turn, help train machine-learning models to detect vulnerabilities more efficiently. This idea was put to the test by implementing VulGr, a modified version of the framework dedicated to genetic improvement named PyGGi. VulGr itself uses CodeQL, a static code analyser, offering a new approach to static detection of vulnerabilities. VulGr’s end goal was to use CodeQL to inject vulnerabilities into programs of the Vul4J dataset. This experiment proved unsuccessful, as CodeQL lacked accuracy and was too time-consuming to produce concrete results in an acceptable time span (less than 72 hours). However, the general approach and VulGr remain relevant for future uses, as CodeQL is an ongoing community effort promising new updates fixing the issues mentioned.

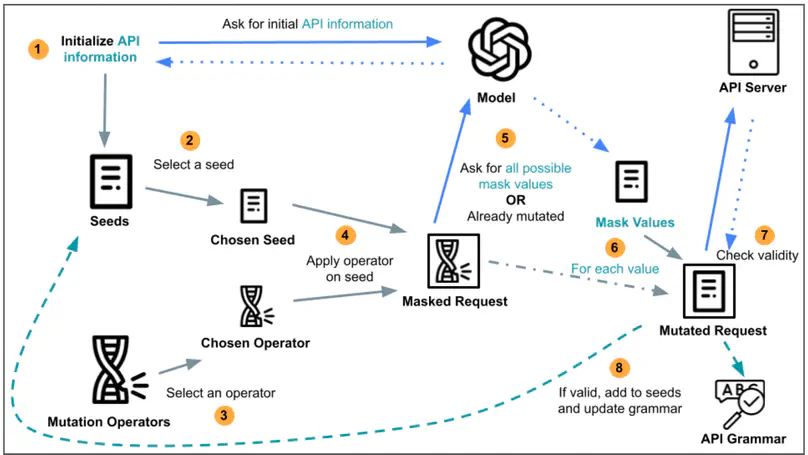

Application Programming Interfaces, known as APIs, are increasingly popular in modern web applications. With APIs, users around the world are able to access a plethora of data contained in numerous server databases. To understand the workings of an API, formal documentation is required. This documentation is also required by API testing tools, aimed at improving the reliability of APIs. However, as writing API documentation can be time-consuming, API developers often overlook the process, resulting in unavailable, incomplete or informal API documentation. Recent Large Language Model technologies such as ChatGPT, trained on billions of resources across the web, have displayed remarkable capabilities at automating tasks. Thus, such capabilities could be utilized for the purpose of generating API documentation. Therefore, this Master’s Thesis proposes the first approach leveraging Large Language Models to automatically infer RESTful API specifications. Preliminary strategies are explored, leading to the implementation of a tool entitled MutGPT. The intent of MutGPT is to discover API features by generating and modifying valid API requests, with the help of Large Language Models. Experimental results demonstrate that MutGPT is capable of sufficiently inferring the specification of the tested APIs, with an average route discovery rate of 82.49% and an average parameter discovery rate of 75.10%. Additionally, MutGPT was capable of discovering 2 undocumented and valid routes of a tested API, which has been confirmed by the relevant developers. Overall, this Master’s Thesis uncovers 2 new contributions:

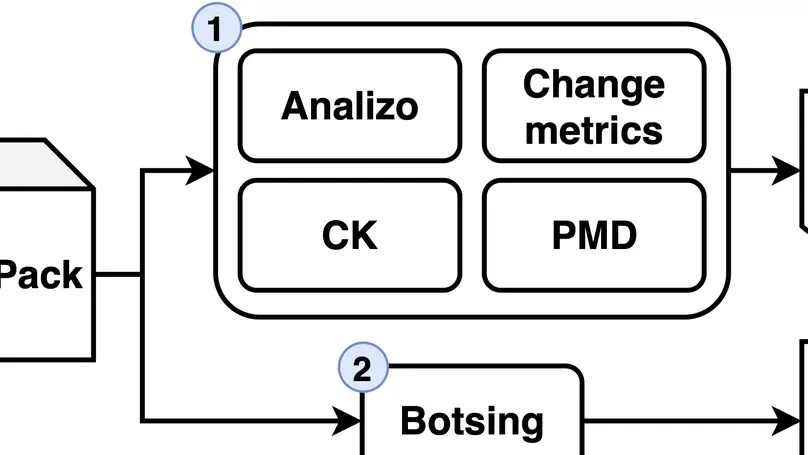

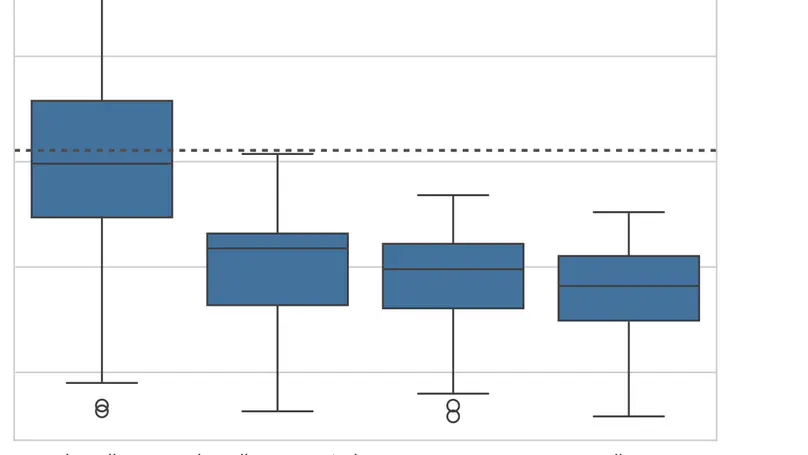

Code Smells have been studied for more than 20 years now. They are used to describe a design flaw in a program intuitively. In this study, we wish to identify the impact of some of these Code Smells and, more specifically, their potential impact on Testability. To do this, we will study the state of the research on both Code Smells and Testability. Using those studies, we will define a scope of parameters to define the two concepts. With that information, we will analyse the statistical distribution of our samples and try to understand the relationship between Code Smells and Testability in a corpus of Java projects.

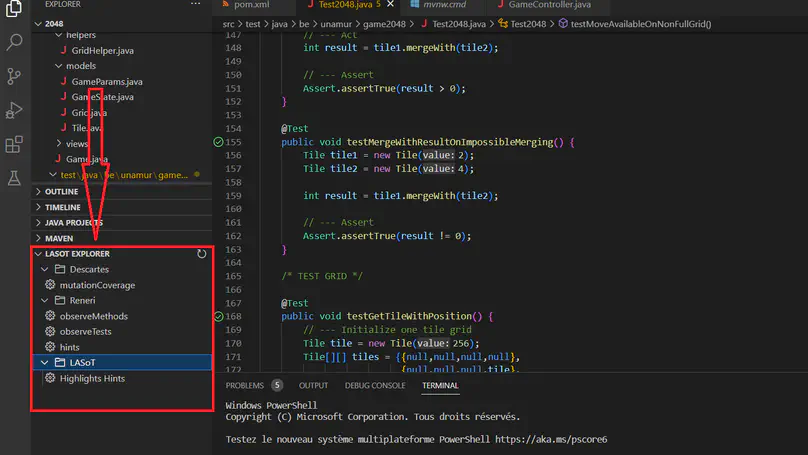

Learning software testing is a neglected subject in computer science courses. Over the years, methods and tools have appeared to provide educational support for this learning. Mutation testing is a technique used to evaluate the effectiveness of test suites. Recently, a variant called extreme mutation testing, which reduces computational and time costs, has emerged. Descartes, an extreme mutation engine, was developed. With the support of a plugin extension called Reneri, Descartes can generate a report providing information to the developer on potential reasons why mutants remain undetected. In this thesis, an extension of Visual Studio Code has been developed in order to incorporate the information generated by Descartes and Reneri. The purpose of the experiment is to assess whether the inclusion of this data can help master’s students improve their test assertions. The results showed that this information integrated into an editor was well received by the students and that it guided them towards a refinement of their suite of tests.

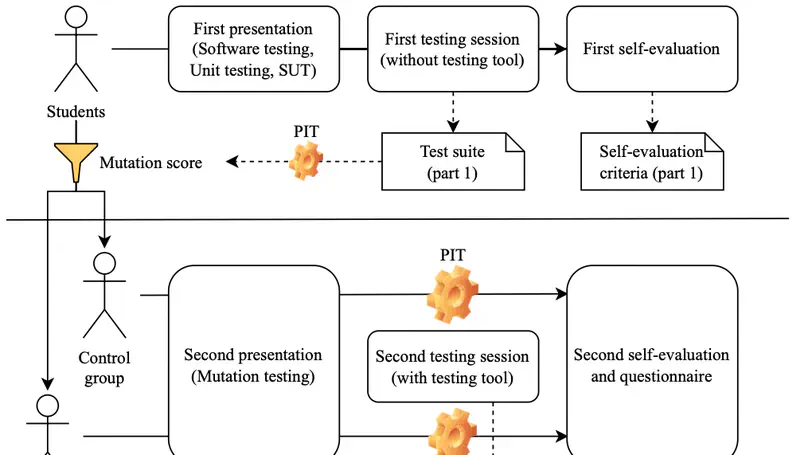

Although software testing is critical in software engineering, studies have shown a significant gap between students’ knowledge of software testing and the industry’s needs, hinting at the need to explore novel approaches to teach software testing. Among them, classical mutation testing has already proven to be effective in helping students. We hypothesise that extreme mutation testing could be more effective by introducing more obvious mutants to kill. In order to study this question, we organised an experiment with two undergraduate classes comparing the usage of two tools, one applying classical mutation testing, and the other one applying extreme mutation testing. The results contradicted our hypothesis. Indeed, students with access to the classic mutation testing tool obtained a better mutation score, while the others seem to have mostly covered more code. Finally, we have published and anonymised the students’ test suites in adherence to best open-science practices, and we have developed guidance based on previous evaluations and our own results.
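The difference between classical and extreme mutation, and the mutation score used to compare them, can be sketched as follows. This is a hand-written toy illustration, not output from any of the tools used in the experiment: the function under test, the two mutants, and the tests are hypothetical.

```python
def apply_discount(price, percent):
    """Original (hypothetical) function under test."""
    return price - price * percent / 100


def classical_mutant(price, percent):
    """Classical mutation: a single operator is changed (- becomes +)."""
    return price + price * percent / 100


def extreme_mutant(price, percent):
    """Extreme mutation: the whole body is replaced by a default return."""
    return 0


def weak_test(fn):
    # Only exercises the zero-discount case.
    return fn(100, 0) == 100


def strong_test(fn):
    # Checks an actual discount computation.
    return fn(100, 20) == 80


def mutation_score(tests, mutants):
    """Share of mutants killed, i.e. failing at least one test."""
    killed = sum(1 for mutant in mutants if any(not test(mutant) for test in tests))
    return killed / len(mutants)


mutants = [classical_mutant, extreme_mutant]
score_weak = mutation_score([weak_test], mutants)
score_strong = mutation_score([weak_test, strong_test], mutants)
```

Here the weak test kills only the extreme mutant, which shows why extreme mutants are considered more obvious to kill, while the classical mutant survives until a more precise assertion is added.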

Many applications are developed with a lot of different purposes and can provide quality output. Nevertheless, crashes still happen. Many techniques such as unit testing, peer-reviewing, or crash reproduction are being researched to improve quality by reducing crashes. This thesis contributes to the fast-evolving field of research on crash reproduction tools. These tools seek better reproduction with minimum information as input while delivering correct outputs in various scenarios. Different approaches have previously been tested to gather input-output data, also called benchmarks, but they often take time and manual effort to be usable. The research documented in this thesis endeavours to synthesize crashes using mutation testing to serve as input for crash reproduction tools.