Abstract

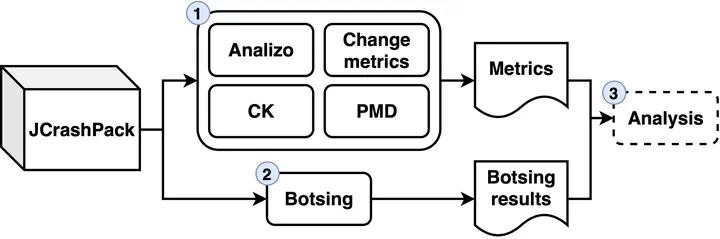

This study presents the initial step towards a thorough analysis of the difficulty to reproduce a crash using search-based crash reproduction. Traditionally, code size and complexity are considered representative indicators of the difficulty for search-based approaches, like search-based unit test generation, to generate tests. However, unlike unit test generation, crash reproduction does not seek to cover a set of behaviors but instead to generate one or more tests exercising a specific behavior reproducing a given crash. In this context, there is no guarantee that the indicators used for unit testing are still valid for crash reproduction. In this study, we seek to identify such indicators by considering various code metrics, code smells, and change metrics. We report our effort to collect those metrics for JCRASHPACK, a state-of-the-art crash reproduction benchmark, and an initial assessment by considering metrics individually. Our results show that although JCRASHPACK is larger than benchmarks used in previous studies, additional crashes should be added to improve its diversity and representativeness, and that no individual metric can be used to characterize the difficulty to reproduce a crash.