Automatic test case generation for virtual reality applications using a scenario engine to define oracles

Benchmarking evaluation protocol

Virtual Reality (VR) is increasingly recognized as a technology with substantial commercial potential. It is progressively being integrated into a wide array of everyday applications and is supported by an expanding ecosystem of immersive devices. Despite this growth, the long-term adoption and maintenance of VR applications remain limited, largely because formalized software engineering practices adapted to the unique characteristics of VR environments are lacking. Among these challenges, the absence of effective, systematic, and reproducible tools for interaction testing is a significant barrier to ensuring application quality and user experience.

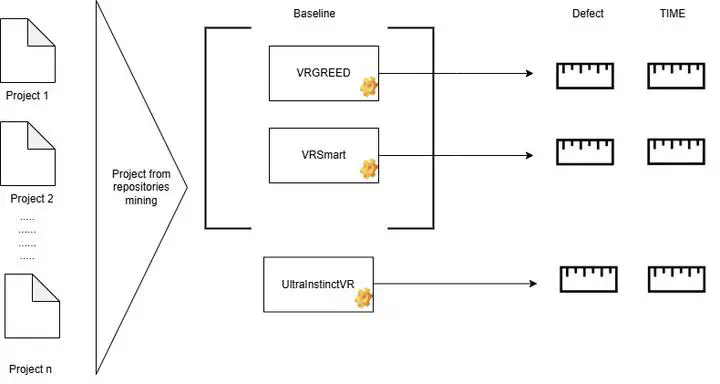

To address this issue, we introduce UltraInstinctVR, a novel model-based software testing framework designed specifically for VR. UltraInstinctVR automates the generation of interaction test cases from predefined models, thereby helping developers validate VR interactions. We evaluate UltraInstinctVR in two parts: (i) a performance comparison with state-of-the-art autonomous VR testing frameworks, and (ii) a user study examining usability and developer satisfaction.
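The abstract does not describe UltraInstinctVR's internal model format, so the following is only a minimal sketch of model-based interaction test generation in general, not the framework's implementation. It assumes that VR interactions are modelled as a finite state machine in which each transition `(state, action) -> expected state` also serves as the test oracle. All names (`MODEL`, `generate_test_cases`, `run_test`, `buggy_app`) and the interaction states are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interaction model: (current state, user action) -> expected next state.
# The expected next state doubles as the oracle for each test step.
Model = dict[tuple[str, str], str]

MODEL: Model = {
    ("idle", "hover"): "highlighted",
    ("highlighted", "unhover"): "idle",
    ("highlighted", "grab"): "held",
    ("held", "release"): "idle",
}


@dataclass
class TestCase:
    actions: list[str]          # sequence of user interactions to replay
    expected_states: list[str]  # oracle: expected state after each action


def generate_test_cases(model: Model, start: str, max_depth: int) -> list[TestCase]:
    """Enumerate every action sequence of length 1..max_depth allowed by the model."""
    cases: list[TestCase] = []

    def explore(state: str, actions: list[str], states: list[str]) -> None:
        if actions:
            cases.append(TestCase(list(actions), list(states)))
        if len(actions) == max_depth:
            return
        # Depth-first walk over the outgoing transitions of the current state.
        for (src, action), dst in sorted(model.items()):
            if src == state:
                actions.append(action)
                states.append(dst)
                explore(dst, actions, states)
                actions.pop()
                states.pop()

    explore(start, [], [])
    return cases


def run_test(case: TestCase, start: str, apply_action: Callable[[str, str], str]) -> bool:
    """Replay a test case and compare each observed state with the oracle."""
    state = start
    for action, expected in zip(case.actions, case.expected_states):
        state = apply_action(state, action)
        if state != expected:
            return False
    return True


def buggy_app(state: str, action: str) -> str:
    """Hypothetical application under test with an injected defect."""
    if (state, action) == ("held", "release"):
        return "held"  # defect: releasing an object leaves it held
    return MODEL[(state, action)]


cases = generate_test_cases(MODEL, "idle", max_depth=4)
failing = [c.actions for c in cases if not run_test(c, "idle", buggy_app)]
print(len(cases), failing)
```

With a depth bound of 4, the sketch derives 8 interaction sequences from the model, and only the sequences that release a held object expose the injected defect. A bounded depth-first enumeration is used here purely for simplicity; a real generator would likely need coverage criteria or sampling to keep the number of test cases manageable.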

Our evaluation shows that the proposed framework outperforms existing automated defect detection tools, particularly in the early stages of execution. In the user study, participants perceived the framework as highly useful for identifying defects within components and rated it positively in terms of efficiency and reliability.